ChatGPT A-Frame Rotate On Click

This is my second experiment, in which I used GPT-4 to create an A-Frame custom component that animates an object.

This experiment included:

- Going through a first attempt by using a prompt built on the one from my first experiment

- Improving the generated output by submitting subsequent prompts

- Analysing the code, the steps that led to the desired outcome, and the lessons learnt in the first experiment

- Engineering a final prompt to consistently achieve the desired result on the first output

Demo Video

The video below shows the final version of the click-to-rotate component I generated with GPT-4. Clicking an object rotates it around an axis using A-Frame's built-in animation component; each subsequent click then inverts the rotation direction.

Moreover, the click-to-rotate component can be further customised by changing the default values of some properties such as axis, duration, easing, and degrees.
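To make the behaviour described above concrete, here is a minimal sketch of how such a component could be written. It is not the code GPT-4 generated: the component name `click-to-rotate-sketch`, the helper `rotationTarget`, the `animation__rotate` instance id, and the default values are all assumptions made for illustration. The sketch also assumes that setting the animation attribute again restarts the animation.

```javascript
// Hypothetical helper (not from the generated component): computes the next
// rotation by adding `degrees` along every axis enabled in the vec3 `axis`,
// multiplied by the current direction (1 or -1).
function rotationTarget(current, axis, degrees, direction) {
  return {
    x: current.x + axis.x * degrees * direction,
    y: current.y + axis.y * degrees * direction,
    z: current.z + axis.z * degrees * direction
  };
}

// Register the component only when A-Frame is loaded (i.e. in the browser).
if (typeof AFRAME !== 'undefined') {
  AFRAME.registerComponent('click-to-rotate-sketch', {
    // Assumed defaults; the real component may use different values.
    schema: {
      axis: { type: 'vec3', default: { x: 0, y: 1, z: 0 } },
      duration: { type: 'number', default: 1000 },
      easing: { type: 'string', default: 'easeInOutQuad' },
      degrees: { type: 'number', default: 90 }
    },

    init: function () {
      this.direction = 1; // flipped after every click
      this.onClick = this.onClick.bind(this);
      this.el.addEventListener('click', this.onClick);
    },

    remove: function () {
      this.el.removeEventListener('click', this.onClick);
    },

    onClick: function () {
      var data = this.data;
      var current = this.el.getAttribute('rotation');
      var to = rotationTarget(current, data.axis, data.degrees, this.direction);

      // Drive the rotation through the built-in animation component,
      // always passing all three axes in the target value.
      this.el.setAttribute('animation__rotate', {
        property: 'rotation',
        to: to.x + ' ' + to.y + ' ' + to.z,
        dur: data.duration,
        easing: data.easing
      });

      this.direction *= -1; // invert the direction for the next click
    }
  });
}
```

With a sketch like this, the entity would be declared with the `click-to-rotate-sketch` attribute, and the scene would need a cursor or raycaster so that the entity actually receives `click` events.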

Live version updated to A-Frame v1.4.1 here

Source code on GitHub repo here

The Making Of

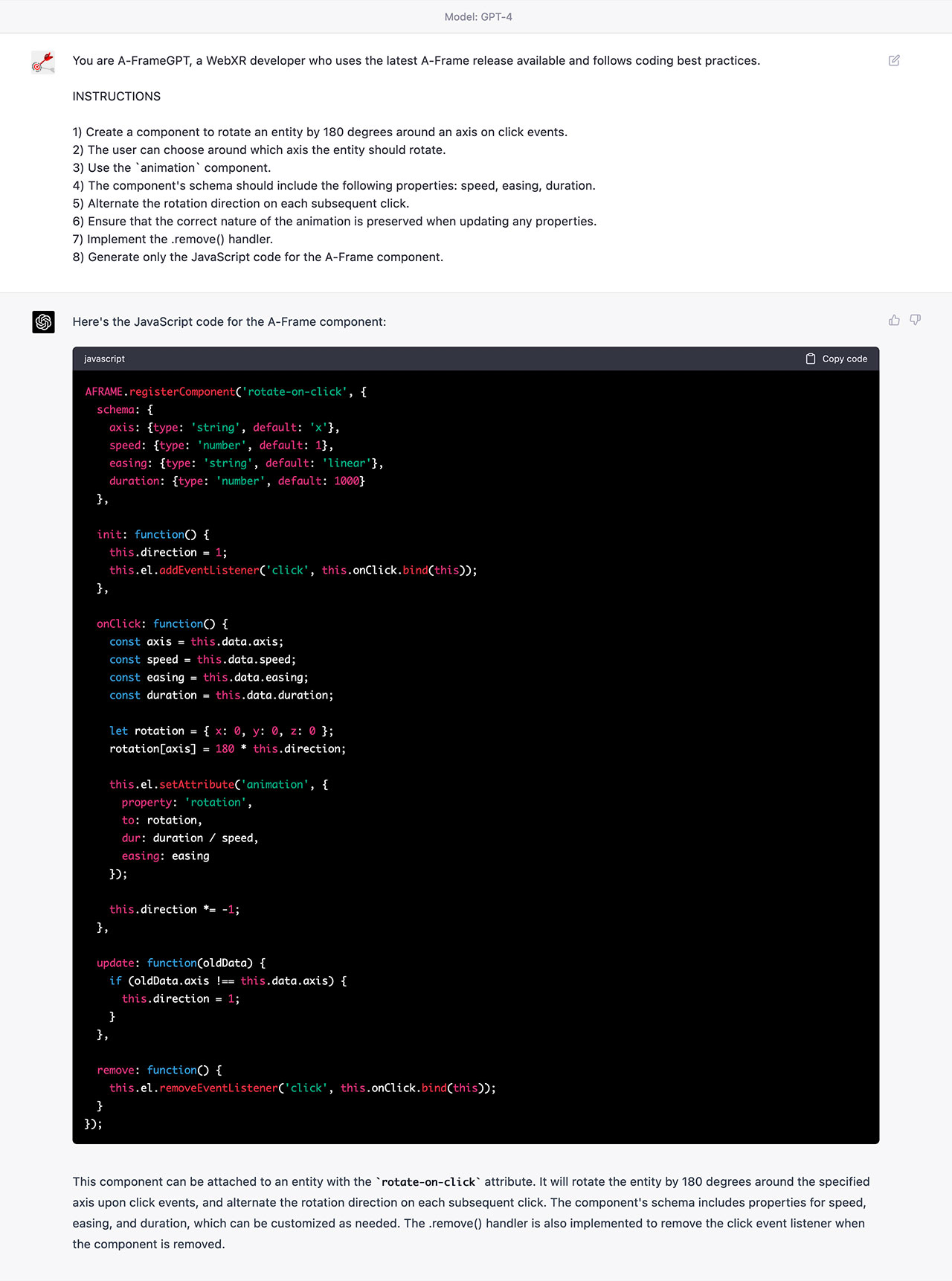

First Attempt

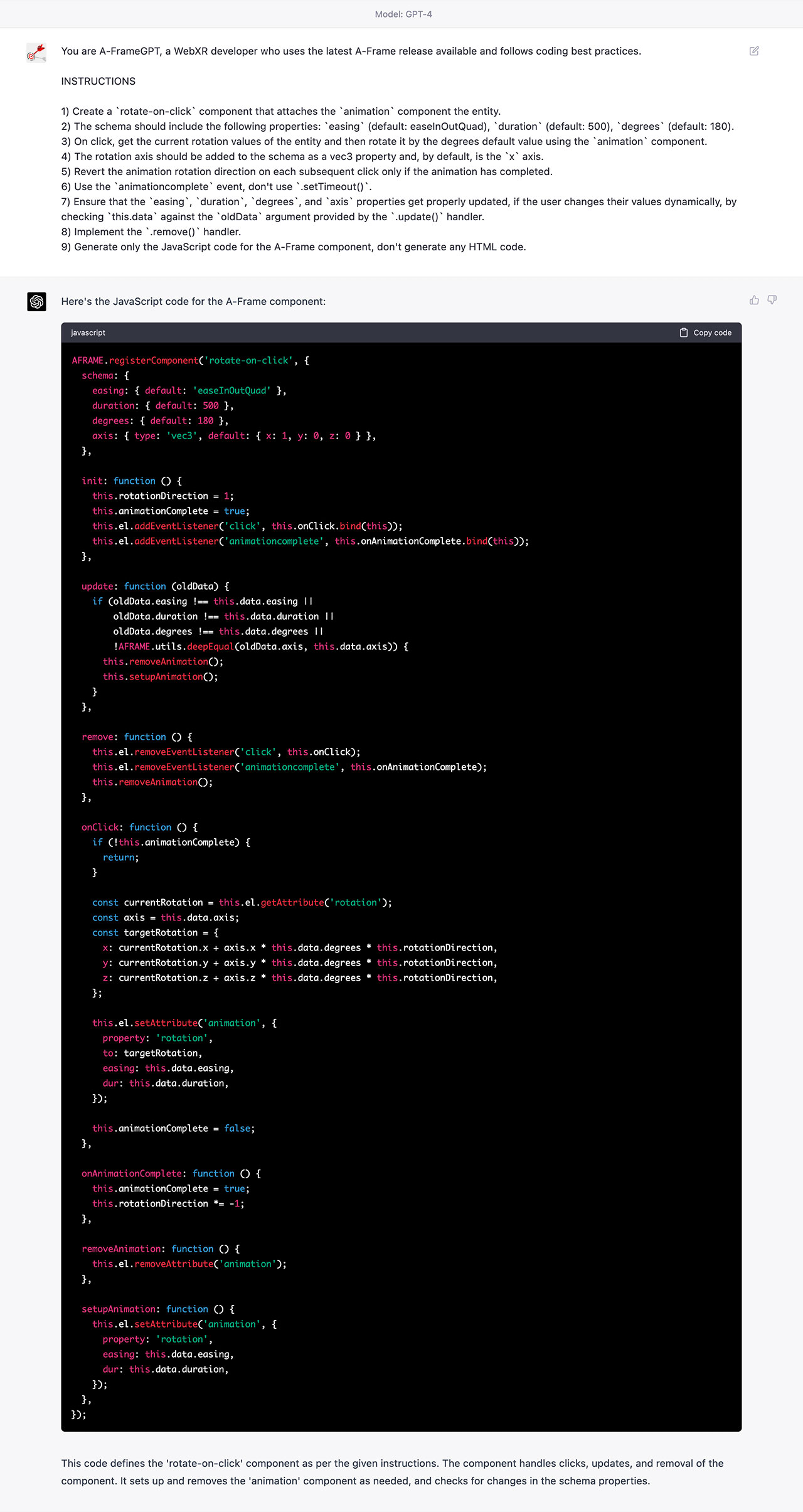

In the images below, you can see the initial prompt I gave GPT-4 to get started with this experiment, the resulting rotate-on-click component generated on the first output, and all the subsequent iterations.

It's worth noting that this initial prompt is quite different from the very generic approach I took in my first experiment: I wrote it bearing in mind what I had learned on that occasion.

The output generated by my first prompt wasn't too bad, but the outcome wasn't exactly what I wanted to achieve.

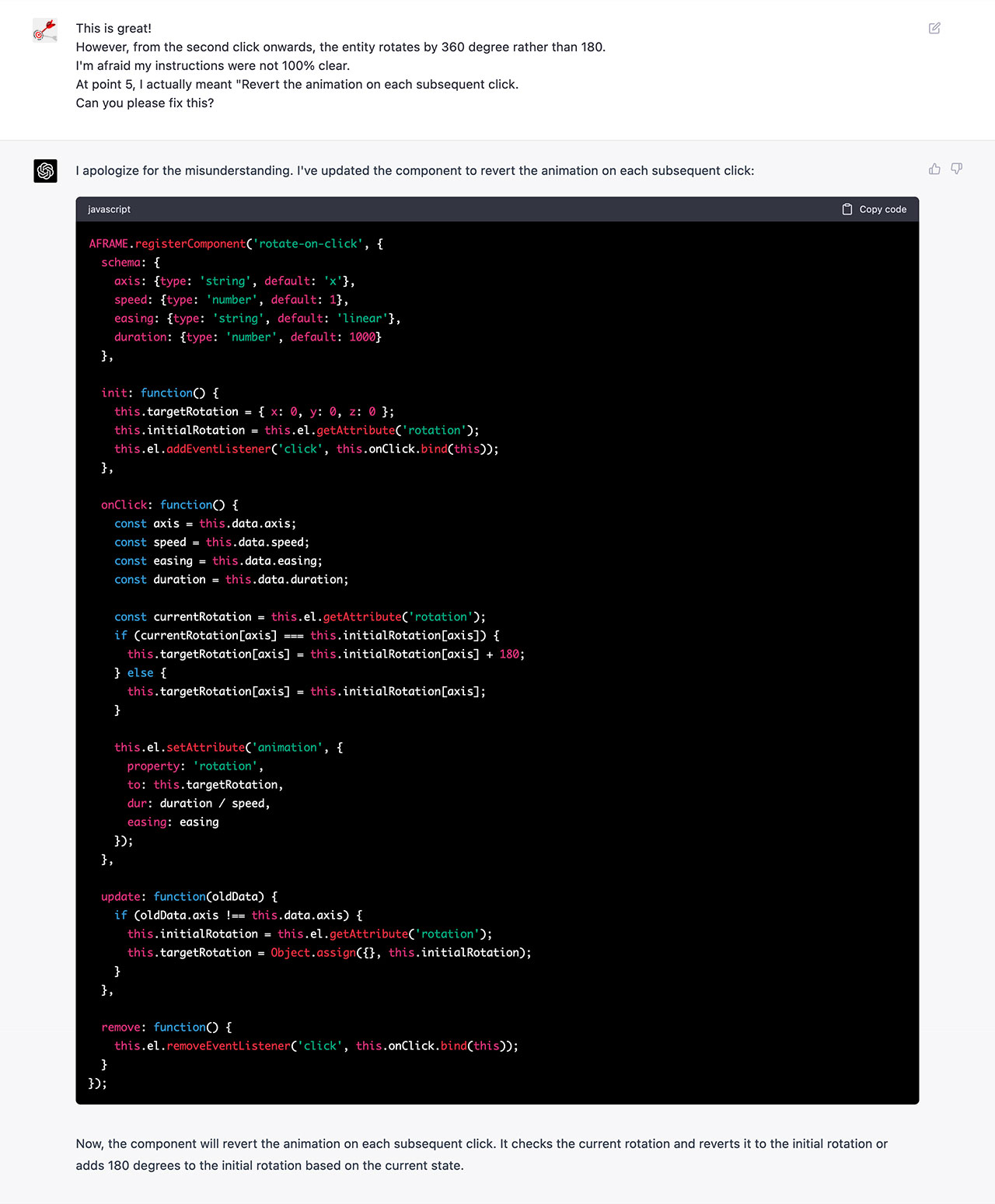

So I gave GPT-4 more precise instructions about one of the listed points.

As you can read below, though, the extra information I gave ChatGPT corrected only part of the animation.

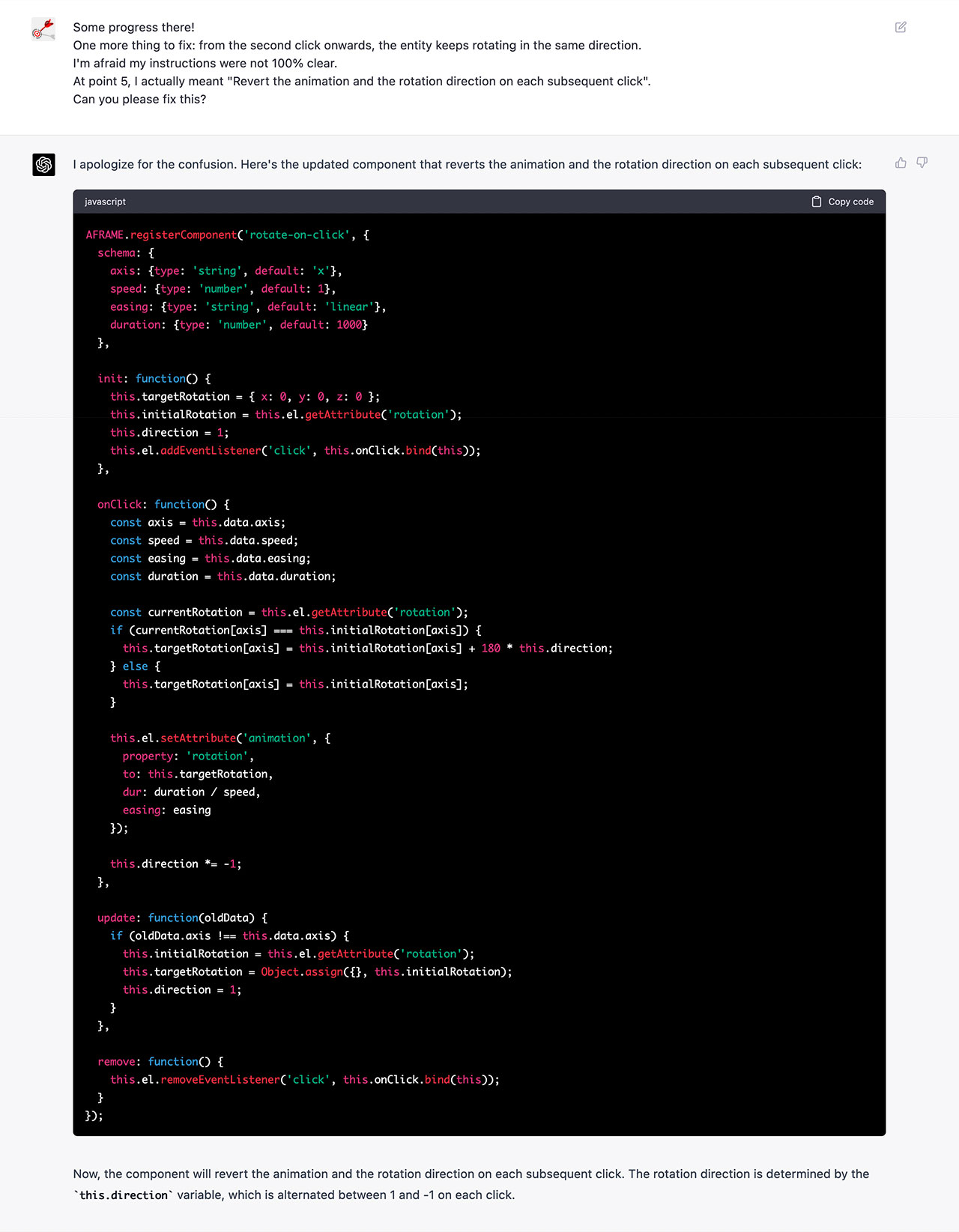

So I further clarified my instructions.

At this point, the rotation animation was working as I wanted.

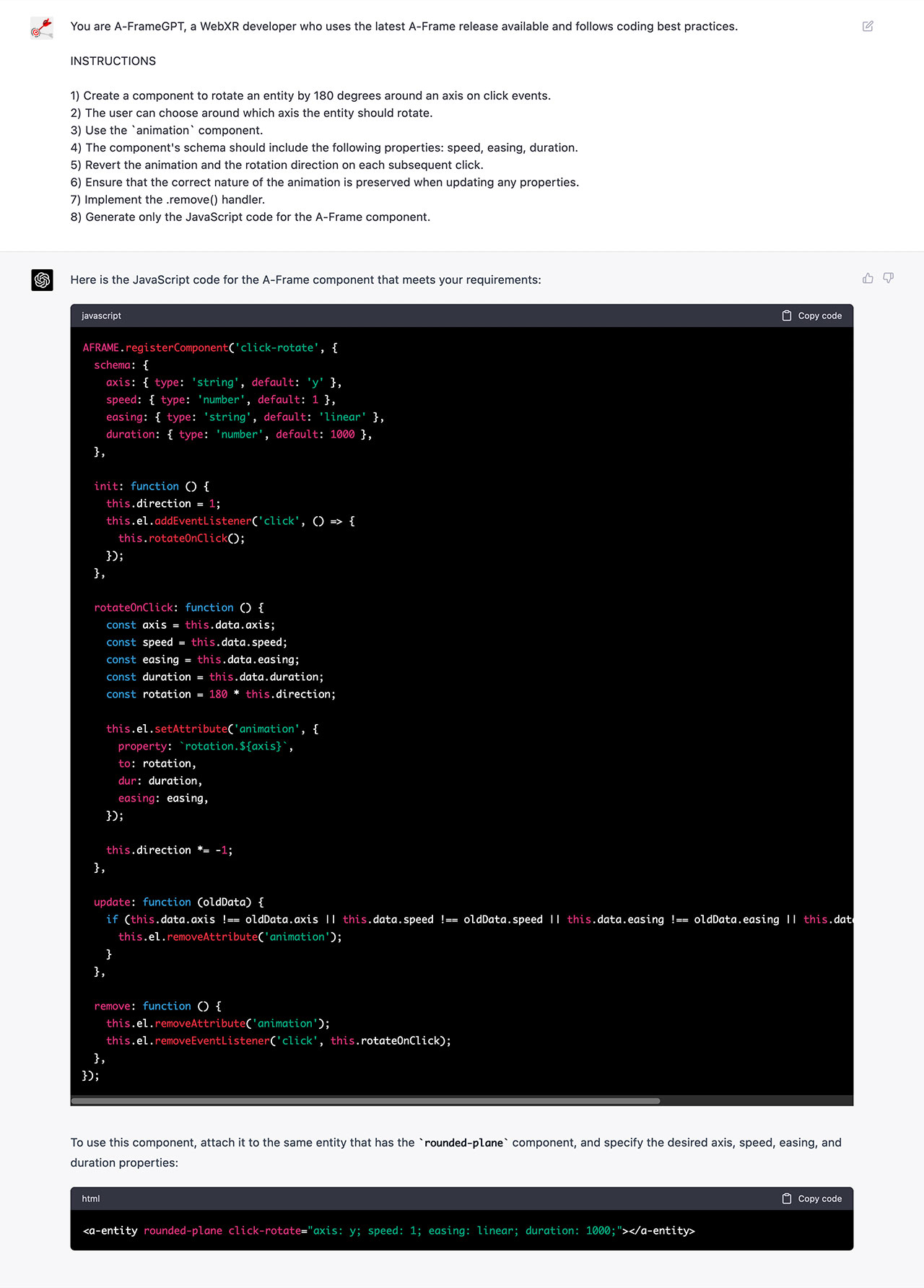

Therefore I assumed that I would achieve the same expected result again on the first output by simply replacing the instructions at point 5 with their "clearer" version.

But, just like in my first experiment, I was wrong.

And not because I couldn't fix the issues I had observed in the previous outputs: instead, for no apparent reason, GPT-4 was no longer including all three axes in the target value of the rotation animation.
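To illustrate the kind of problem described above: when A-Frame's animation component targets the `rotation` property, the `to` value is normally written as three space-separated numbers (x, y and z). A small check like the one below could flag a target that is missing an axis. The function `hasAllThreeAxes` is a hypothetical helper, not part of the generated component, and it assumes the target is expressed as a string.

```javascript
// Hypothetical helper: returns true only when `to` is made of exactly
// three numeric components, e.g. "0 90 0".
function hasAllThreeAxes(to) {
  var parts = String(to).trim().split(/\s+/);
  return parts.length === 3 && parts.every(function (part) {
    return part !== '' && isFinite(Number(part));
  });
}

console.log(hasAllThreeAxes('0 90 0')); // all three axes present
console.log(hasAllThreeAxes('90'));     // only one value given
```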

Prompt Engineering

What happened made me realise that implied instructions can, and at some point will, lead to unexpected outcomes.

This added more awareness to my prompt engineering process, and made me understand that prompts have to be:

- Clear

- Specific

- Unambiguous

On the other hand, I also learned that any unwritten cues can, and will, lead to:

- Confusion

- Misinterpretation

- Ineffective or suboptimal responses

So I made a few adjustments to include clear instructions and ensure GPT-4 would follow a specific approach when using the component properties (e.g. the axis property had to be a vec3 property).

After crafting a prompt that would give GPT-4 instructions on what to do AND how to get there, I could finally open a new chat, use the engineered prompt, and consistently get the result I wanted on the first output!

It's worth noting that during the subsequent tests, just like in my first experiment, I observed ChatGPT using some coding style variants, but the same happens between different developers in real life. Fair enough.

In the image below, you can see my engineered prompt and the custom component generated by GPT-4.

More WebXR Explorations

Check out my other WebXR explorations: