Immersive Web Emulator "Point At" Feature

An experiment to provide feedback on the Immersive Web Emulator v1.3.0, released in June 2023.

In case you are not familiar with it, the Immersive Web Emulator, developed by Meta Reality Labs, is an extremely useful tool for testing and iterating on WebXR experiences without a physical XR device.

After trying it out, I appreciated several key features of v1.3.0, such as:

- Accurate simulation of controller input, including binary input (buttons) and analog input (trigger, grip, joysticks)

- Accurate simulation of hand input, including built-in hand poses and pinch gesture control

- Keyboard action mapping for added efficiency

- Keyboard input relay

Since the tool is still under active development, it will most likely keep improving.

That said, in my experience at the time of writing (June 2023), the Immersive Web Emulator still has some weak points that affect its UX.

Problem

Currently, testing intersection and interaction with the objects in a WebXR scene is a somewhat tedious task: each time, developers have to manually adjust the VR controller's position and orientation in the 3D viewport until it points at the object they want to test.

Because of the inherent limitations of working on a 2D screen, transform manipulations in the interactive 3D viewport often take several attempts, which slows down the process and can cause frustration.

This time-consuming process is a UX problem, and it works against the intuitive, user-friendly experience Meta Reality Labs aims for with the Immersive Web Emulator.

Solution

There is a clear need for a more efficient way to manipulate the VR controllers' transforms, and this led me to a possible solution: the "Point At" feature.

With this feature, developers can intersect and interact with any object in the WebXR scene quickly and with minimal effort. Instead of manually adjusting the VR controller's position and orientation in the 3D viewport, they type the object's ID selector in a dedicated input field and press a button, and the emulator applies the required transform automatically.
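To make the idea more concrete, here is a minimal sketch of the math a "Point At" button could run. It is not the emulator's code or the prototype's code, and `pointAtRotation` is a hypothetical name. It assumes a right-handed, Y-up coordinate system, a pointing ray along the controller's local -Z axis (the WebXR target-ray convention), and yaw-then-pitch (YXZ) Euler angles in degrees, as used by A-Frame's `rotation` component.

```javascript
// Minimal "Point At" sketch (hypothetical, not the emulator's implementation).
// Assumptions: right-handed Y-up space, the controller's pointing ray runs
// along its local -Z axis, and the result is an A-Frame-style rotation in
// degrees applied in YXZ order (yaw first, then pitch).

const RAD_TO_DEG = 180 / Math.PI;

/**
 * @param {{x:number, y:number, z:number}} controllerPos world-space controller position
 * @param {{x:number, y:number, z:number}} targetPos world-space target position
 * @returns {{x:number, y:number, z:number} | null} pitch (x), yaw (y), roll (z)
 *   in degrees, or null when the controller sits exactly on the target
 */
function pointAtRotation(controllerPos, targetPos) {
  const dx = targetPos.x - controllerPos.x;
  const dy = targetPos.y - controllerPos.y;
  const dz = targetPos.z - controllerPos.z;

  // Distance from the controller to the target projected on the ground plane.
  const horizontal = Math.hypot(dx, dz);
  if (horizontal === 0 && dy === 0) return null; // no direction to point in

  // Pitch: angle above (positive) or below (negative) the horizontal plane.
  const pitch = Math.atan2(dy, horizontal);

  // Yaw: turn around the vertical axis, with -Z as "straight ahead" at 0.
  // Directly above or below the controller, any yaw works, so keep 0.
  const yaw = horizontal === 0 ? 0 : Math.atan2(-dx, -dz);

  // Roll does not change where the ray points, so it stays at 0.
  return { x: pitch * RAD_TO_DEG, y: yaw * RAD_TO_DEG, z: 0 };
}
```

In an A-Frame scene, the result could be applied with `controllerEl.setAttribute('rotation', pointAtRotation(controllerPos, targetPos))`, where `controllerEl` is a hypothetical controller entity and both positions are in world space. This assumes the controller has no rotated parent, because the `rotation` component sets a local rotation.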

Demo Video

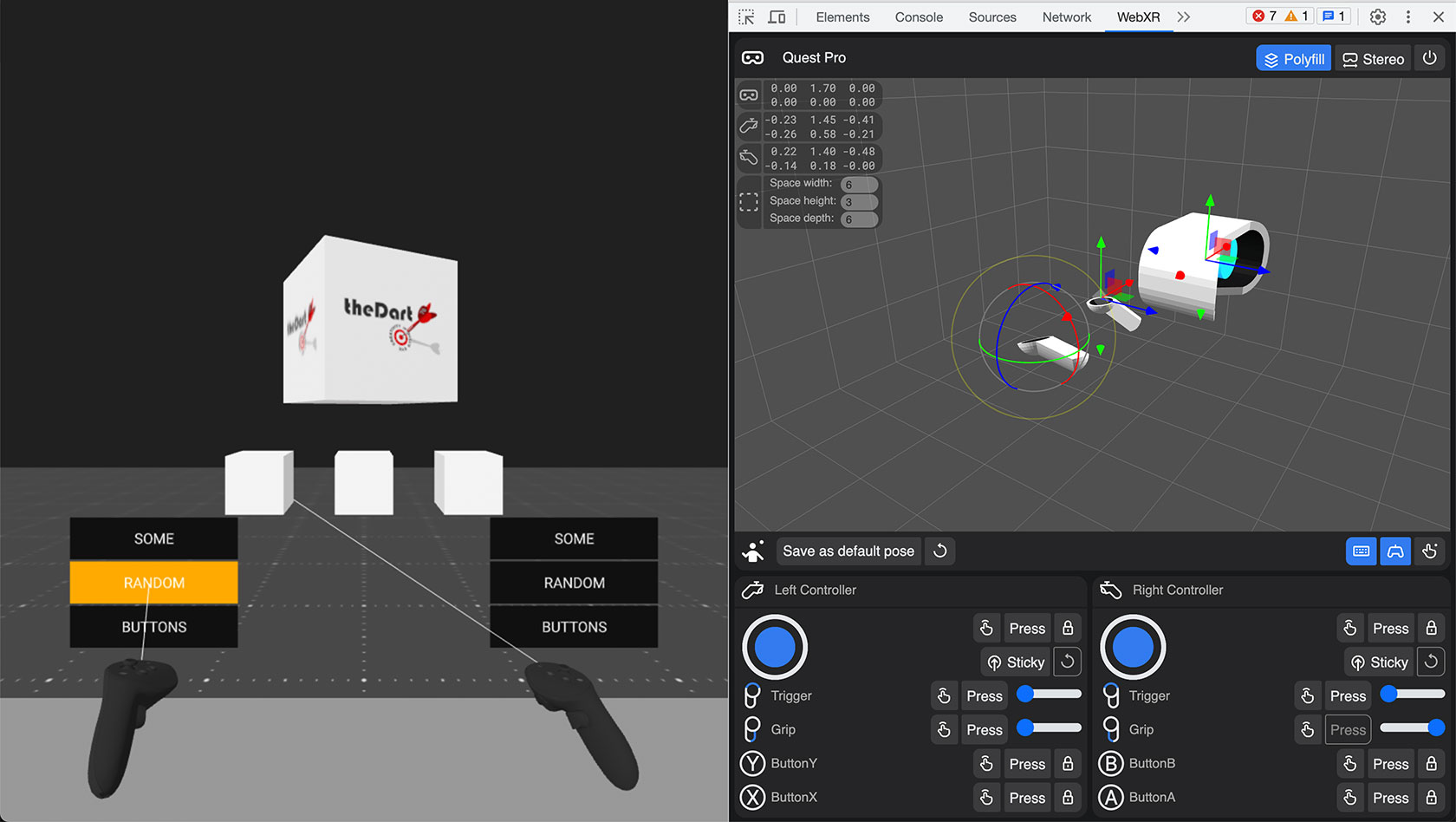

This demo video shows a rough prototype of the "Point At" feature, which aims to improve the Immersive Web Emulator's overall UX and efficiency.

If you want to try the prototype, you can find the live version here.

Prototype details on Twitter

Hi-Fi Mockup

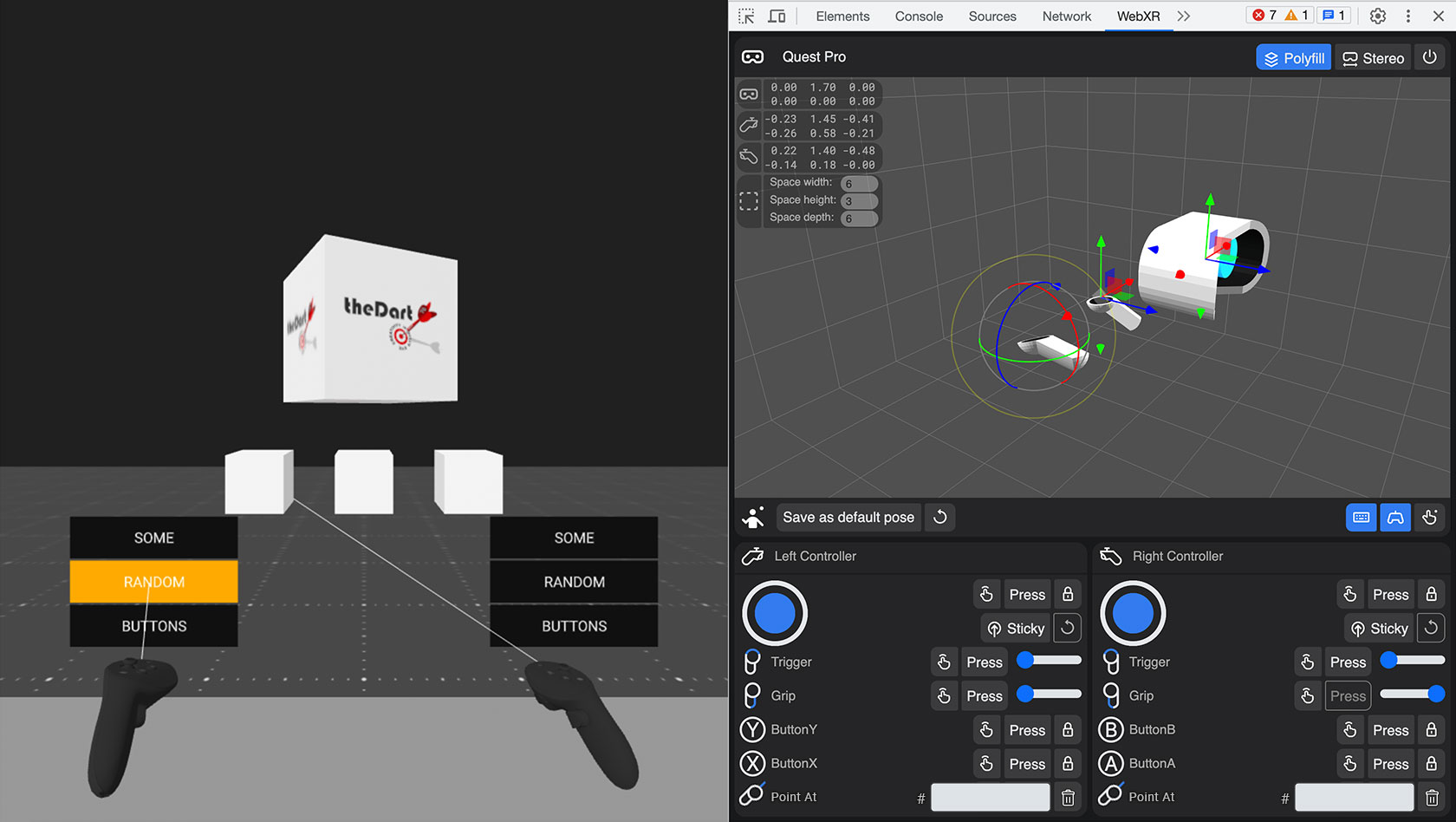

This mockup shows the updated Immersive Web Emulator UI, including the "Point At" feature and its icons at the bottom of the controller input panel.

The Making Of

Rough Prototype

To create the "Point At" feature prototype, I used:

- A combination of HTML, JavaScript, and CSS to build a simple web page with some background information, a basic UI, and the logic needed to interact with the embedded WebXR scene

- The A-Frame framework to create the WebXR scene and show how the VR controllers (both in the scene the developer is building and in the Immersive Web Emulator's 3D viewport) would behave when using the "Point At" feature to test for intersection and interaction

NOTE: WebXR frameworks and game engines such as A-Frame, Three.js, Babylon.js, and PlayCanvas each work differently, so the primary goal of this prototype is to demonstrate the "Point At" feature, not to prescribe how to implement it.
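The logic that connects the page's UI to the scene starts by turning whatever the developer types into a target object. Below is a hypothetical sketch of that step; `resolvePointAtTarget` and the injected `findById` lookup are illustrative names, not part of the prototype or the emulator. Looking the element up by ID, rather than passing the raw text to `document.querySelector`, sidesteps selector syntax errors for IDs such as `1box` that would need CSS escaping.

```javascript
// Hypothetical glue for the "Point At" input field: turn what the developer
// typed into an element ID and look the target up. `findById` is injected so
// the same logic works with document.getElementById in the browser or with a
// plain Map lookup in a test.

/**
 * @param {string} rawInput text typed in the field, e.g. "#box-1" or "box-1"
 * @param {(id: string) => (object | null | undefined)} findById lookup function
 * @returns {{ ok: true, target: object } | { ok: false, error: string }}
 */
function resolvePointAtTarget(rawInput, findById) {
  const trimmed = rawInput.trim();

  // Accept the ID with or without a leading "#", like a CSS ID selector.
  const id = trimmed.startsWith("#") ? trimmed.slice(1) : trimmed;
  if (id === "") {
    return { ok: false, error: "Enter the ID of the object to point at." };
  }

  // getElementById returns null and Map#get returns undefined when nothing
  // matches; both are treated as "not found".
  const target = findById(id);
  if (!target) {
    return { ok: false, error: `No object with ID "${id}" found in the scene.` };
  }
  return { ok: true, target };
}
```

In the browser, `findById` could simply be `(id) => document.getElementById(id)`, and the returned error message could be displayed next to the input field instead of failing silently.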

Hi-Fi Mockup

To create the Hi-Fi mockup, I simply edited a screenshot of the Immersive Web Emulator v1.3.0 UI in Photoshop.

More WebXR Explorations

Check out my other WebXR explorations: